The How of Macros 1: Elixir compilation

As Abraham Lincoln did not say: those who like this sort of thing (details of programming language implementation) will find this the sort of thing they like.

As I was writing my fifth post in the "writing macros that create functions" series, I thought that it would be good to have a parallel series on the nitty-gritty details of how macros work, on the theory that learning how something works is usually a big help in understanding what it does.

After I'd written drafts for five posts, I came to doubt that. The handling of parse trees for literal data – essential to how macros work – is interestingly (but necessarily) peculiar. Peculiar enough that I've come to wonder whether knowing the details of parse-tree handling is actually useful. It might just be better to stick to the approach of the original series: understand parse trees shallowly, think mostly in terms of the Elixir source, and not fuss too much about why some rule for macro-writing works.

On the other hand... as Abraham Lincoln did not say: those who like this sort of thing (details of programming language implementation) will find this the sort of thing they like.

Warning: Even though this series is about detail, I'll deliberately simplify reality – or, um, lie – when I judge a complete explanation would be more confusing than useful. In my life as an explainer, I've discovered some people really really dislike that teaching strategy. If you're one of them, ... well, you've been warned.

With that said, I'm learning the compiler by writing about it. If I've made incorrect or harmful simplifications, please let me know.

The series: Elixir compilation, syntax trees, literal data, quote, unquote, escape, hygiene and var, what's up with require?

Thanks to Case de Groot for comments on a draft.

We usually think about compilation as being about def and similar expressions within modules. But it's instructive to look at a very simple expression like IO.inspect 5, which can be a top-level expression in a module:

defmodule MacroExamples do

IO.inspect 5

When the compiler is given any module, it will (roughly speaking) compile each expression and then immediately execute it. So IO.inspect 5 will be compiled and executed to print the string "5" to standard output before whatever comes next is processed.

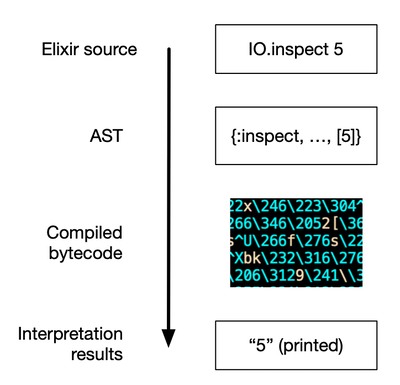

The processing of IO.inspect 5 looks like this:

The first thing the compiler does is parse the Elixir text into an abstract syntax tree (AST). Such trees are built out of core Elixir data structures like tuples and lists. When it comes to compiling, the functions in Tuple, List, and Enum are way more useful than the string-processing functions in String and Regex. The next post describes the structure of syntax trees.

The next step is bytecode generator. Nice as they are for compilers, syntax trees are not useful for other programs, specifically the Erlang virtual machine (usually called "the BEAM"). So compilation next turns a syntax tree into a linear sequence of bytecodes that the BEAM executes (or, more recently, converts those bytecodes into a different linear sequence of machine code that's suitable for the underlying hardware).

The final step is to ask the BEAM to execute the just-compiled instructions. When it does that, IO.inspect prints the string "5".

(Note: a purist might argue that the BEAM interprets; only hardware executes. I'm not going to bother with the distinction.)

Binding maps record name-value mappings

Consider these two lines:

a = 1

IO.inspect aThe first line binds the value 1 to the name a. The second dereferences the name a to retrieve the value 1 and prints it.

There is a point in time after the first line has been compiled and executed, but before the second line has been compiled. Where is the association between a and 1 stored during that time?

When it comes to actual implementation, the answer is complicated. Conceptually, though, we can just say there exists a Map between names and values. I'm going to call such things binding maps. (I'm not using a more common name, "environment", because the word is vastly overloaded in software and, when it comes to Elixir macros, usually refers to a different thing.)

Compiling a non-def top-level expression

There are three different binding maps to keep in mind if you want to understand how the compiler works. The first is what I'm calling the compiler's temporary binding map.

Let me justify that name. Consider this code:

defmodule MacroExamples do

a = 1

...After MacroExamples is compiled, the binding between a and 1 is completely unavailable to any module that imports or aliases or uses or requires it. The binding is temporary, only relevant during compilation.

Furthermore, it's only relevant in a very specific way. Suppose later code in the module looks like this:

...

def return_a(), do: aThat will produce a compile error: undefined function a/0. The body of a def is intended to be executed long after the module is compiled. One programming language design question is: should a binding created during compilation be able to affect execution of code within function definitions? In Elixir, the answer is "no".

(At least when it comes to names bound like a = 1. There's a completely different binding map that manages module attribute bindings like @limit 55. That binding map is best described later, because it works roughly like the quote and unquote special forms used in macros.)

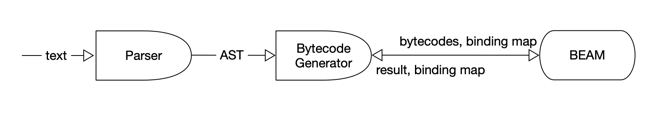

I want to explain how the compiler's temporary binding map is used. To do that, I'll pretend that the parser is an Erlang process that hands an AST to the bytecode generator. The AST comes from a single Elixir expression (like a binding expression or a def or anything that can appear at the top level of a module). The generator creates bytecodes and instructs the BEAM to execute them. The BEAM is another process.

This approach makes handling temporary binding maps easy. When it's time to compile a new module, the compiler creates a new parser and bytecode generator. The bytecode generator is started with an empty binding map. As top-level expressions are compiled and executed, that temporary binding map is passed to the BEAM along with the bytecodes. The BEAM returns a value from the bytecode execution (which, at the top level, is ignored) and a binding map (possibly updated). The bytecode generator maintains the binding map for the next expression. When the module has been completely compiled, the generator process (and, thus, the temporary binding map) is thrown away.

If you're familiar with GenServer (the usual way to implement Erlang processes), you can imagine the bytecode generator implemented like this:

defmodule BytecodeGenerator do

use GenServer

def start(),

do: GenServer.start_link(__MODULE__, %{})

# ---

def init(binding_map), do: {:ok, binding_map}

def handle_call({:make_and_run_bytecodes, parse_tree}, _from, binding_map) do

bytecodes = to_bytecodes(parse_tree)

{retval, binding_map} = BEAM.execute(bytecodes, binding_map)

{:reply, retval, binding_map}

end

BEAM.execute will use values from the binding map if the bytecodes are from an expression like, say, IO.inspect a. It will put a value into the binding map if it's from an expression like a = 5.

Compiling and using an anonymous function

Now let's look at the execution of the following two expressions, again at the top level:

calculate = fn a ->

plus_1 = a + 1

plus_1 * 5

end

a = calculate.(5)You can think of the fn as compiling into a structure that contains the function's arglist (as a list of atoms) and its bytecodes (as a binary). Like this:

%Fn{args: [:a], bytecodes: <<18, 97, ..., 98, 131>>}(This is just a metaphor for what actually happens, but the truth is unhelpfully complicated.)

That structure is added to the compiler's temporary binding map under the key calculate. That in itself is no different than binding any type of data to a variable.

For clarity, I'll rewrite this:

calculate.(5)... to this:

apply calculate, [5]... to emphasize that calling an anonymous function means that some other code is treating the function as data. Below, I show what is essentially the implementation of apply/2, except that I've used the explicit values calculate and [5]:

%{args: args, bytecodes: bytecodes} = calculate

binding_map = Enum.zip(args, [5]) |> Map.new

{retval, _ignored_binding_map} = BEAM.execute(bytecodes, binding_map)

retvalThat is, apply forms a new binding map from only the arguments to the function and gives that to BEAM.execute. That's our second kind of binding map: the argument binding map.

This approach is the reason names in the compiler's temporary binding map can't be used within a function: they weren't made available to the function when it executes. (The compiler knows that, so you get a compile error rather than a runtime error about dereferencing an unbound name.)

Note that any changes made to the argument binding map (for example, by binding plus_1 to a+1 inside the anonymous function) are not allowed to "leak" out of the function. It happens that I'm reusing BEAM.execute in apply. BEAM.execute would return a binding map with entries for both a and plus_1, but that's thrown away (line 3 above). It would be silly to do anything else. What would be next? Global variables? Changing the value of constants?

We're not quite done, as we still have the a=... part of the second line:

a = calculate.(5)Because apply returns a value and throws away the binding map, that's no different than a = 30. That is, the compiler puts the binding into the temporary binding map which – to belabor the point – has nothing to do with the argument binding map used for the calculation.

Compiling a def, or: the definition binding map

def is a macro. I'll simplify how it works and say that the expansion of this:

def add1(a), do: a + 1... is this:

:elixir_def.store_definition(

:def, :add1,

fn a -> a + 1 end)That is: the function being defined is compiled just like any anonymous function. The result is taken to be the third argument of a call to store_definition.

Notice that we've got two sets of bytecodes here. We have the bytecodes from the function being defined, and we have different bytecodes from the compilation of the store_definition call.

When the store_definition bytecodes are executed, the BEAM is instructed to put the argument bytecodes into yet another binding map under the key :add1. Those argument bytecodes are annotated as coming from a def (as opposed to a defp or a defmacro).

I'll call this new binding map – the third – the definition binding map. Unlike the other two, it's a permanent binding map, one that's placed into an on-disk ".beam" file when compilation is finished. During compilation, the compiler will search definition binding maps to produce error messages like this:

** (UndefinedFunctionError) function IO.inspec/1 is

undefined or private. Did you mean one of:

* inspect/1

* inspect/2

* inspect/3

The definition binding map is different than the other binding maps because the key combines both the name and the arity, but I'm going to mostly ignore that distinction. I'll also ignore default arguments, guards, and such, as understanding their details doesn't help to understand macros.

Calling a function defined with def

Most of the conceptual machinery is now out of the way. There are only a few facts about function calls left to cover.

Suppose the compiler is working on this code:

... Config.Reader.read("configfile") ...That is the same thing as this:

apply Config.Reader, :read, ["configfile"]... which looks a lot like the earlier use of apply with an anonymous function:

apply calculate, [5] ... except that it has three arguments instead of two.

The substantive difference is that the value of calculate immediately supplies all the information apply/2 needs. In the apply/3 case, the compiler needs to do a bit more work: it has to find the information using the first two arguments.

That's "a small matter of programming": the ".beam" file for Config.Reader must be read and the definition binding map extracted. Then read/1 has to be used as a key in the map. The resulting value can now be handled as if it were from an anonymous function: it can be passed to apply/2.

Here's a function call without an explicit module name:

... max(1, 2) ...The compiler searches for max/2 in the definition binding maps for all imported modules. It finds it in Kernel's binding map. (Kernel is always imported. ) That match yields the module name, so we're back to compiling this:

apply Kernel, :max, [1, 2]alias works similarly. There's a map that translates aliases into complete module names, then the compiler searches those modules for a match.

About the definition binding map for __MODULE__

But there is one wrinkle I'm ignoring: the definition binding map that's being built during compilation of the current module. That's the one associated with the pseudo-constant __MODULE__. There are two cases.

1. When compiling the body of a function, the compiler will look in the current module's definition binding map before any imported ones. So, for example, Map.fetch! can call Map.fetch and do so by referring to it as fetch (without explicitly naming a module).

2. For top level code, though, the current module's definition binding map is never searched. That's why the following doesn't work:

def add1(a), do: a + 1

IO.inspect add1(5) # undefined function add1/1The above code would work in some Lisps (for example). I don't know the precise reason Elixir doesn't support it. I suspect it's due to complexity I hand-waved away. Despite what I said, expressions aren't compiled and immediately executed. The compiler actually makes more than one pass over the module. That's why, for example, a function body can refer to a function that's defined later in the module. I'm sure that a function definition in the compile-time definition binding map is only "half baked" compared to one read from a ".beam" file, and so is not actually ready to be executed.

Calling a macro

Finally! Talk about macros!

A macro is just a function. It executes just like any other function. What's special is how the compiler uses that function.

Let's start with defmacro. It's a function very like def. You can see that by using Macro.expand on a defmacro expression. You'll see the expansion looks just like the one for def, except that the first argument to :elixir_def.store_definition is :defmacro instead of def:

:elixir_def.store_definition(:defmacro, ...That's a tiny difference, but the effect is profound. Consider this code, where max is an ordinary function in Kernel:

... max(:rand.uniform(3), 2) ...The parser will produce a syntax tree from that text and hand it to the bytecode generator.

The generator finds the definition of max/2 in Kernel's definition binding map. Because it's tagged with def, the use of max will be turned into bytecodes to be executed later.

But let's say store_definition tagged max as a defmacro. The processing is different:

- The function

maxis immediately called by the compiler. Its first argument is not the result of:rand.uniform, but rather the syntax tree for this particular call to:rand.uniform. (That is, it's a nested structure of tuples and lists.) - The macro is expected to return a syntax tree. The bytecode generator pretends it had received that syntax tree instead of the one representing the call to

max, and produces bytecode from that.

So, from the compiler's point of view, it can encounter a def function, which means "produce bytecodes that will someday call that function". But a defmacro function means: "call that function now, and produce bytecodes from the result."

Next: syntax trees